No matter what you heard, size matters. Specially in the world of AI models, having a smaller and more affordable model is the key to win the competition. This is why Microsoft even invested time, GPU and money on Phi project, which is a Small Language Model or SLM for short.

In this post, I represent Nucleus. My newest language model project, which is based on Mistral (again) and has 1.13 billion parameters. And of course, this post will have a s*it ton of reference to HBO’s Silicon Valley series 😁

Background

If you know me, you know that I have a good background in messing around with generative AI models such as Stable Diffusion, GPT-2, LLaMa and Mistral. I even tried to do something with BLOOM (here) before but since the 176B model is too expensive to be put in the mix, I left it behind.

But later, I started my own AI image generation platform called Mann-E, and in previous weeks, my team delivered Maral, which is a 7 billion parameters language model specializing in Persian language.

After observing the world of smaller but more specific language models (should we call them SMBMLMs now?) like Phi, and also after observing the release of TinyLLaMa, I just started a journey to find how can I stay loyal to Mistral models but make them smaller.

You know, since the dawn of time, the mankind tried to make things smaller. Like smaller cars, smaller homes, smaller computers and now smaller AI models!

Basic Research

In my journey, I just wanted to know if someone ever bothered to make a smaller version of Mistral or we have to go through the whole coding procedure ourselves.

Lucky us, I could find Mistral 1B Untrained on HuggingFace and even asked the author a few questions about the model. As you can see, they’re not really okay with the model but I saw the potential. So I decided to keep this model in my arsenal of small models for research.

Then, I searched for datasets, and sparks started in my head about how can I make the damn thing happen!

The name and branding (and probably Silicon Valley references)

The name Nucleus comes from HBO’s Silicon Valley. Which is by far my most favorite shows of all time. If you remember correctly, Hooli CEO, Gavin Belson had something to do to piss Richard off, right? So he made Nucleus. But his Nucleus was bad. I tried to make mine better at least 😁

Since we know it’s time to pay the piper, let’s waste less time and jump right into the technical details of the project.

Pre-Training

Since the model claimed to be untrained we can understand that it only knows what the language is right? Even if now you try to infer the model on HuggingFace or locally, you may get a huge sequence of letters with no meaning at all.

So our first task was to pretrain that. Pretraining the model was quiet easy using a 3090 and spending 40 hours. It was done on the one and only TinyStories dataset.

Actually this dataset is great for pre-training and giving the base models the idea of the language and linguistic structures. It does it pretty well. Although since it only has 2 million rows, you have to expect huge over-fitting which can be easily fixed trough fine tuning the model.

Training on Tiny Textbooks

Well, the whole point of Phi 1 was that textbooks are all you need and since Microsoft doesn’t like to share their dataset with us, we had to perform a huge research on available options.

The very first option coming to my mind was using GPT-4 to generate textbooks but it could be astronomical minding that we are not funded and spending a few thousand dollars on a dataset? no thanks.

So during this research procedure, we discovered Tiny Textbooks dataset. Apparently Nam Pham did a great thing. They crawled the web and made it to textbooks. So kudos to them for letting us use their awesome dataset.

Okay, fine tuning called for another 40 hours of training, and it was fine. After fine-tuning for two epochs and 420k steps each, we’ve got the best results we could get.

Results

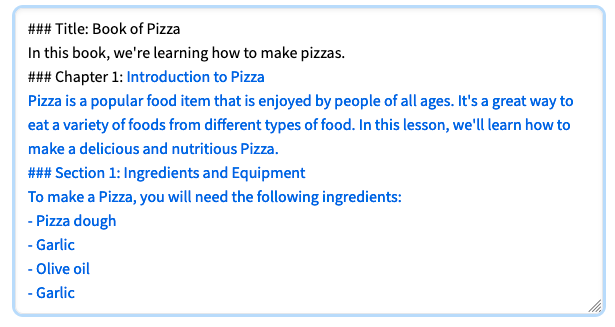

On TinyStories, the model really loved telling stories about Lily and there was no surprise for me at least. But on Tiny Textbooks, the model did a great job. Okay, this is just the result when I asked for a pizza recipe:

And as you can see, it’s basically what HuggingFace offers. With a little bit of settings, you easily can get good results out of this baby!

But sadly it still sucks at two things (which are basically what make you even click on an LLM-related link). First is question-answering (or instruction following) which is not surprising and second which made me personally sad is coding since I am a developer and I like a well-made coding assistant.

But in general, it can be competing with other well known models I guess. it all depends on what we train the model on, right?

But it still needs more effort and training, so we are heading to the next section!

License

If you know me from the past, you know I love permissive licenses. So this model is licensed and published under MIT license. You can use it for commercial use without any permission from us.

Further changes and studies

- The model does well on English. But what about more languages? My essential mission is to try to make it work with Persian language.

- It is good at generation of textbooks and apparently loves food recipes and history lessons. But it needs more. Maybe code textbooks are fine.

- The model should be trained on pure code (StableCode style) and also code-instruct style (I haven’t seen models like that or maybe because I am too lazy to not check all the models).

- The model should be trained on a well-crafted instruct-following dataset. For me personally, it is OpenOrca. What do you suggest?

Links

- HuggingFace: https://huggingface.co/NucleusOrg/Nucleus-1B-alpha-1

- GitHub (Colab Notebooks): https://github.com/prp-e/nucleus

Donations are appreciated

If you open our github repository, you will find a few crypto wallets. Well, we appreciate donations to the projects, because we’re still not funded and we’re waiting for investors’ responses.

These donations help us keep the project up, make content about them and spread the word for Free/Libre and Open Source Software or FLOSS!

Conclusion

In the world where people get excited about pretty much every react js app wrapped around OpenAI’s Chat API and call it a new thing, or companies try to reinvent the iPod with the power of ChatGPT, and also make a square shaped iPod Touch, new models are the key to keep our business up.

But you know, if models are still huge and you can’t run them locally, this will call for more and more proprietary stuff where you have no control over the data and you may end up giving up the confidential data of your company to a third party.

Open source small language models or Open SLMs, are the key to have a better world. You easily can run this model on a 2080 (or even less powerful GPU) and you know what it means? Consumer hardware can have access to good AI stuff.

This is where we are headed in 2024, a new year of awesomeness with open models, regardless of their size.