In the previous post I mentioned that I could not get LLaMA Mesh to work, right? Well, I finally did, and in this post I am going to explain what happened and why LLaMA Mesh is not a good option at all.

First, I will explain the workflow of the model’s deployment, because I think it is important to know the flow. Then, I will tell you what I asked it and why I am very disappointed in this model (although I thought it might be a promising one).

The Flow

In this part, I’m explaining which flows I tried in order to make LLaMA Mesh work. The first flow I chose was an absolute failure, but this morning I went through every place where I could host a custom model. That is how I finally managed to deploy and test the model, only to end up pretty disappointed.

The failed flow

First, I paid a visit to my go-to website, RunPod, and tried to use their serverless system to deploy the model using the vLLM package. I explained this in the previous post.

At first it didn’t work, so I decided to go with a quantized version. It didn’t work either. I know that if I spent a few more hours on their website I would get the model running, but to be honest, it wasn’t really a priority for me at the moment.

The second failure

This wasn’t quite a failure though. After I couldn’t deploy the model in the one way I knew, I just headed over to OpenRouter. I guessed they might have the model, but I was wrong.

I didn’t surrender here either. I paid a visit to Replicate as well. There I noticed some good models labeled as 3D, but none of them is LLaMA Mesh, the one I wanted.

The Successful One

Well, after a few unsuccessful attempts, I thought of Google Colab. But I remembered that their free tier is not suitable for eight-billion-parameter models that are not quantized.
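
To make that concrete, here is a rough back-of-the-envelope estimate. It only counts the weights, and both the 16 GiB GPU budget and the 20% headroom for the KV cache and activations are my own assumptions for illustration, not numbers Colab guarantees.

```python
GIB = 1024 ** 3

# Assumptions for illustration only: a 16 GiB GPU and 20% extra
# headroom for the KV cache, activations and runtime overhead.
ASSUMED_GPU_GIB = 16.0
ASSUMED_HEADROOM = 1.2


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / GIB


def fits(num_params: float, bytes_per_param: float) -> bool:
    """True if weights plus the assumed headroom fit in the assumed budget."""
    needed = weight_memory_gib(num_params, bytes_per_param) * ASSUMED_HEADROOM
    return needed <= ASSUMED_GPU_GIB


if __name__ == "__main__":
    params = 8e9  # an eight-billion-parameter model
    for name, bytes_per_param in [("fp16", 2.0), ("int8", 1.0), ("4-bit", 0.5)]:
        size = weight_memory_gib(params, bytes_per_param)
        print(f"{name}: {size:.1f} GiB of weights, fits: {fits(params, bytes_per_param)}")
```

Under these assumptions the unquantized half-precision weights alone take almost the whole card, which is why only the quantized variants look realistic on a small GPU.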

What is another option then? Well, it all comes down to an email I received this morning. I was struggling to wake up as usual when I saw my phone vibrating. I picked it up and saw an email from GLHF. They keep quite a good bunch of models in their always-on mode, and they also let you run your own models (if hosted on Hugging Face), so I decided to go with them!

The Disappointment

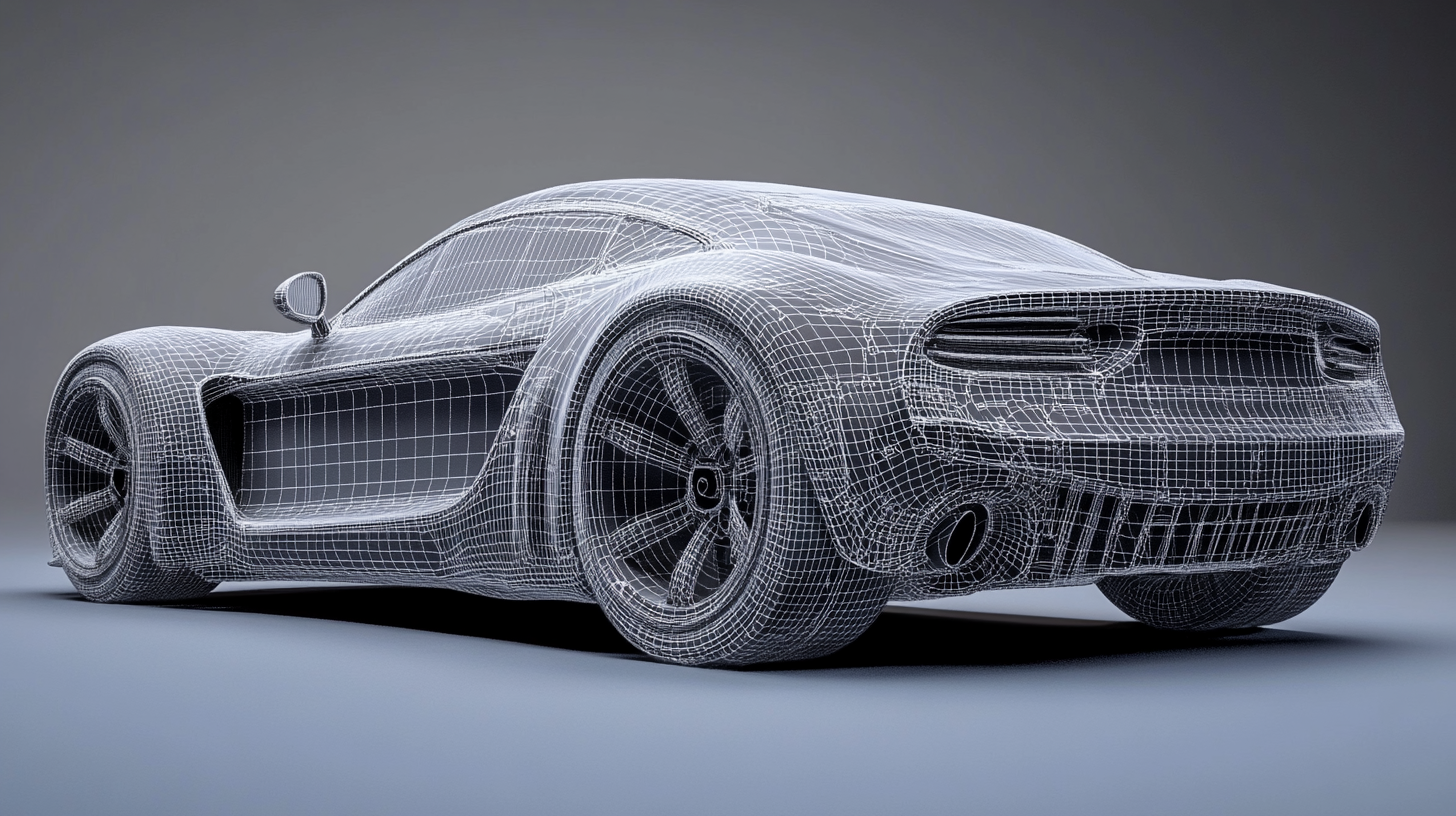

Now it’s time to talk about how disappointed I was when I saw the results. The model is not really different from the other LLMs I covered in the previous post, and it has just one advantage: quantization in the output 3D objects.

The integer quantization, however, is only good for speeding up the generation and making the output a little more “low-poly”. Otherwise, the final results were good only if you asked for basic shapes such as cubes or pyramids.
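
As far as I understand it, the idea is to snap every vertex coordinate onto a small integer grid before the mesh is written out as OBJ text, so each coordinate becomes a short integer instead of a long decimal. Below is a minimal sketch of that idea on a cube. The 64-level grid and the helper names `quantize_vertices` and `to_obj` are my own assumptions for illustration, not code from the LLaMA Mesh project.

```python
def quantize_vertices(vertices, bins=64):
    """Snap float coordinates onto an integer grid with `bins` levels per axis."""
    flat = [c for v in vertices for c in v]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero for a degenerate mesh
    return [
        tuple(round((c - lo) / span * (bins - 1)) for c in v)
        for v in vertices
    ]


def to_obj(vertices, faces):
    """Serialize vertices and faces as plain OBJ text."""
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += ["f " + " ".join(str(i) for i in face) for face in faces]
    return "\n".join(lines)


# A unit cube centered at the origin.
cube_vertices = [
    (-0.5, -0.5, -0.5), (0.5, -0.5, -0.5), (0.5, 0.5, -0.5), (-0.5, 0.5, -0.5),
    (-0.5, -0.5, 0.5), (0.5, -0.5, 0.5), (0.5, 0.5, 0.5), (-0.5, 0.5, 0.5),
]
# Quad faces; OBJ indices start at 1.
cube_faces = [
    (1, 4, 3, 2), (5, 6, 7, 8), (1, 2, 6, 5),
    (2, 3, 7, 6), (3, 4, 8, 7), (4, 1, 5, 8),
]

if __name__ == "__main__":
    print(to_obj(quantize_vertices(cube_vertices), cube_faces))
```

A cube survives this perfectly because all of its corners already sit on the grid. Anything with curves or fine detail gets rounded to the nearest grid point, which is where the “low-poly” look comes from.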

Should we rely on LLMs for 3D mesh generation at all?

The short answer is no. The long answer is that we need to work more on the procedures, understand the formats better, and then experiment with different formats and ways of generating 3D meshes.

Mesh generation in general is only one problem. We also have problems such as polishing the output 3D object and giving it materials, which can’t easily be done by a large language model.

What’s next?

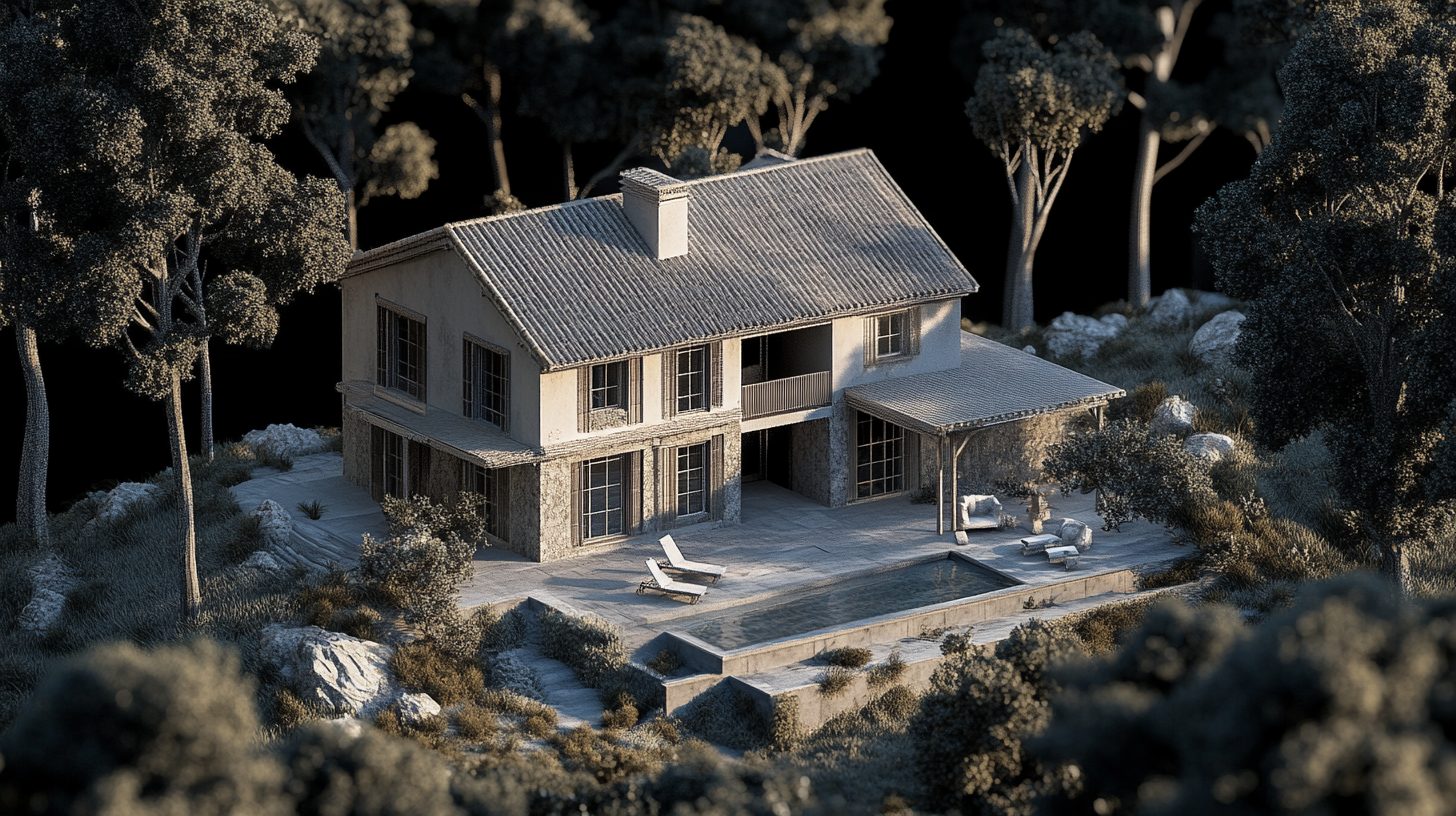

Now I’m more confident about the idea I discussed before: take existing image models, fine-tune them on 3D objects, and use an existing image-to-3D model to make the 3D objects we need.

But I have another problem: what happens when we generate items but don’t have a place to put them? So for now, I guess we need a world generator system, which we should start thinking about.