In the previous post about building the metaverse with AI, we discussed the different possibilities and AI models we can use to make a virtual world. I am personally a big fan of 2D worlds, but let’s be honest: a 2D world is a perfect choice for a low-budget indie game and nothing more.

In this post, I am going to talk about the different models and methods I found for making 3D objects from text or image inputs. It was a fun experiment, and I think it’s worth sharing with the outside world in the form of a blog post.

My discoveries

The first thing I want to discuss is my own discoveries in the field of 3D generation using AI. I had always wondered what 3D objects actually are, and I got my answer.

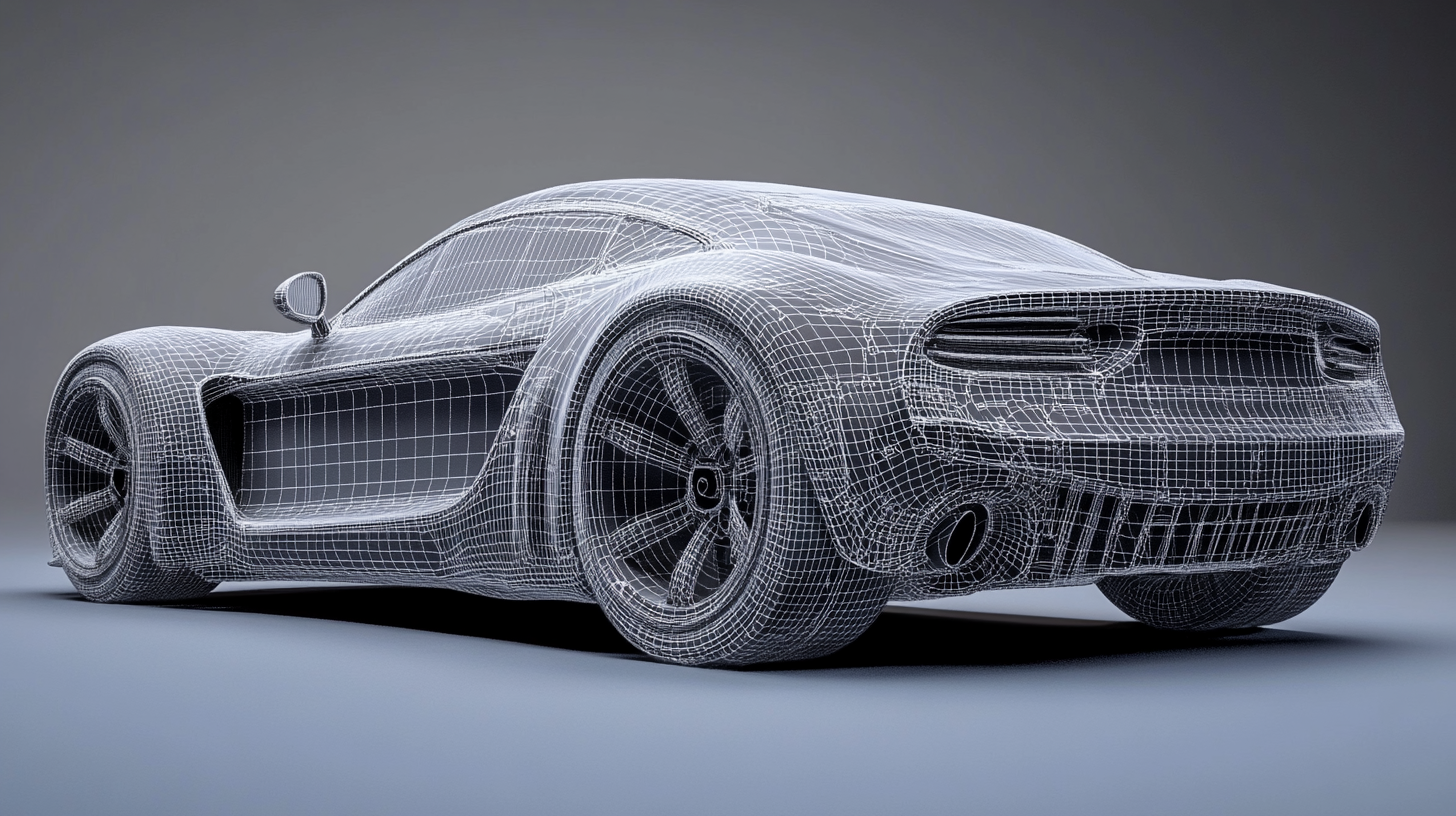

The simplest way to find out was to create different 3D files with a 3D creation/editing tool such as Blender and investigate the outputs. While working with different file formats, I discovered that OBJ files are just simple text-based descriptions of the vertices (points) and faces that form a shape.
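To make this concrete, here is a minimal sketch of a unit cube written as OBJ text, plus a few lines of Python that read it back. It assumes only the two most common OBJ record types: `v` (a vertex with x, y, z coordinates) and `f` (a face listing 1-based vertex indices). The helper name `parse_obj` is my own illustration, not part of Blender or any library.

```python
# A unit cube written as OBJ text: 8 vertices ("v") and 6 quad faces ("f").
# Face indices are 1-based and refer to the vertices in order of appearance.
CUBE_OBJ = """\
v 0 0 0
v 1 0 0
v 1 1 0
v 0 1 0
v 0 0 1
v 1 0 1
v 1 1 1
v 0 1 1
f 1 2 3 4
f 5 6 7 8
f 1 2 6 5
f 2 3 7 6
f 3 4 8 7
f 4 1 5 8
"""


def parse_obj(text):
    """Hypothetical helper: collect vertices and faces from OBJ text.

    Only plain "v" and "f" records are handled; everything else is skipped.
    """
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # A token like "1/1/1" also carries texture/normal indices;
            # this sketch keeps only the vertex index before the first slash.
            faces.append(tuple(int(p.split("/")[0]) for p in parts[1:]))
    return vertices, faces


vertices, faces = parse_obj(CUBE_OBJ)
print(len(vertices), "vertices,", len(faces), "faces")
```

Saving `CUBE_OBJ` to a file with an `.obj` extension should give you something a 3D tool can import, which is exactly why a text model can, in principle, write 3D objects.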

I also recently found out about a research paper called LLaMA Mesh. In short, the authors found that LLaMA models are capable of generating OBJ files, and then fine-tuned the model further on 3D OBJ data so that it produces more coherent results when asked for 3D OBJ files.

To find the best base model for the metaverse, I ran a bunch of tests on different models, and here I explain every single test I’ve done.

Models I’ve tested

ChatGPT

Yes. ChatGPT is always my first go-to for AI, especially when it’s about text. Since OBJ files are basically text files with information about the desired shape, I made a stop at ChatGPT’s website and tested its ability to make 3D objects.

I used the GPT-4o mini, GPT-4o and o1 models. They have some understanding of OBJ creation, but that understanding is very basic. The best shape I could get from OpenAI’s flagship models was a simple cube, which you don’t need any design skill to make in any 3D design program.

Claude

Anthropic’s Claude was no better than ChatGPT. I had personally gotten much better code output from this model in the past, so I assumed it would perform better at this kind of generation too.

But I was wrong. I still couldn’t get anything better than basic shapes from this one either: cubes, cylinders or pyramids. These shapes aren’t really complicated, and you can make them even without any 3D design knowledge, since Blender, 3ds Max, Maya, etc. all have them as built-in tools.

LLaMA

I read the paper and understood that this whole game of LLaMA Mesh started when the researchers found out that LLaMA is capable of generating 3D OBJ files. That wasn’t surprising to me, since LLaMA models are from Meta, and Meta is the company that started the whole metaverse hype.

In this particular section, I’m only talking about LLaMA itself and not the fine-tune. I used the 1B, 3B, 8B, 70B and 405B models from the 3.1 and 3.2 versions. I can’t say they performed better at generating results, but they showed a better understanding, which made me really hopeful.

At the end of the day, when I put their generations to the test, I got the same result again. These models were great when it came to basic shapes; when the request gets more complicated, the model seems to understand it, but the results are far from acceptable.
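One cheap way to put generated OBJ text to the test before even opening it in a 3D tool is a structural sanity check. The sketch below is only an illustration of that idea, not the procedure I followed for the tests above. The function name `check_obj` is hypothetical, and it assumes faces use positive, 1-based vertex indices (negative, relative indices are treated as errors here to keep things short).

```python
def check_obj(text):
    """Hypothetical sanity check for model-generated OBJ text.

    Returns a list of human-readable problems. An empty list only means
    the structure is consistent, not that the shape looks good.
    """
    problems = []
    records = [line.split() for line in text.splitlines()]

    # First pass: count vertices and make sure each has 3 numeric coordinates.
    vertex_count = 0
    for number, parts in enumerate(records, start=1):
        if parts and parts[0] == "v":
            vertex_count += 1
            try:
                ok = len([float(p) for p in parts[1:4]]) == 3
            except ValueError:
                ok = False
            if not ok:
                problems.append(f"line {number}: vertex needs 3 numeric coordinates")

    # Second pass: every face must reference vertices that actually exist.
    face_count = 0
    for number, parts in enumerate(records, start=1):
        if not parts or parts[0] != "f":
            continue
        face_count += 1
        if len(parts) < 4:
            problems.append(f"line {number}: face needs at least 3 vertices")
        for token in parts[1:]:
            index_text = token.split("/")[0]
            if not index_text.isdecimal() or not 1 <= int(index_text) <= vertex_count:
                problems.append(f"line {number}: bad vertex index {token!r}")

    if vertex_count == 0:
        problems.append("no vertices found")
    if face_count == 0:
        problems.append("no faces found")
    return problems


good = "v 0 0 0\nv 1 0 0\nv 0 1 0\nf 1 2 3\n"
bad = "v 0 0 0\nv 1 0 0\nf 1 2 3\n"  # the face points at a vertex that does not exist
print(check_obj(good))
print(check_obj(bad))
```

A check like this catches broken files, but it cannot tell a coherent chair from a pile of triangles, so looking at the result in a viewer is still necessary.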

LLaMA Mesh

I found an implementation of LLaMA Mesh on Hugging Face, which can be accessed here. Unfortunately, I couldn’t get it to work. Even on their Space on HF, the model sometimes stops working without showing any errors.

It seems that, because of the high traffic this model can attract, they have limited the number of requests and tokens you can get from the model, and this is the main cause of those strange failures.

The samples on their pages look very promising, so of course we will give this model the benefit of the doubt.

Image to 3D test

Well, as someone who’s interested in image generation using artificial intelligence, I like the image-to-3D approach more than text-to-3D. I also have another reason for this personal preference.

Remember the first blog post of this series, where I mentioned that I was a cofounder at ARmo? One of the features our customers requested most was this: “we give you a photo of our product and you make it 3D”. Although we had the best 3D design experts working on it, the process was still highly dependent on humans and not scalable at all.

Okay, I am not part of that team anymore, but that doesn’t mean I don’t care about scalability concerns in the industry. Also, I may end up working in the same space again at any time.

Anyway, in this part of the blog post, I will go through the different image generators I used to find out which models give the best results.

Disclaimer: I am not including example images here; I only describe how each model behaves. Image samples will be uploaded in future posts.

Midjourney

When you’re talking about AI image generation, the first name people usually mention is Midjourney. I personally use it a lot for different purposes, mostly for comparison with my own model.

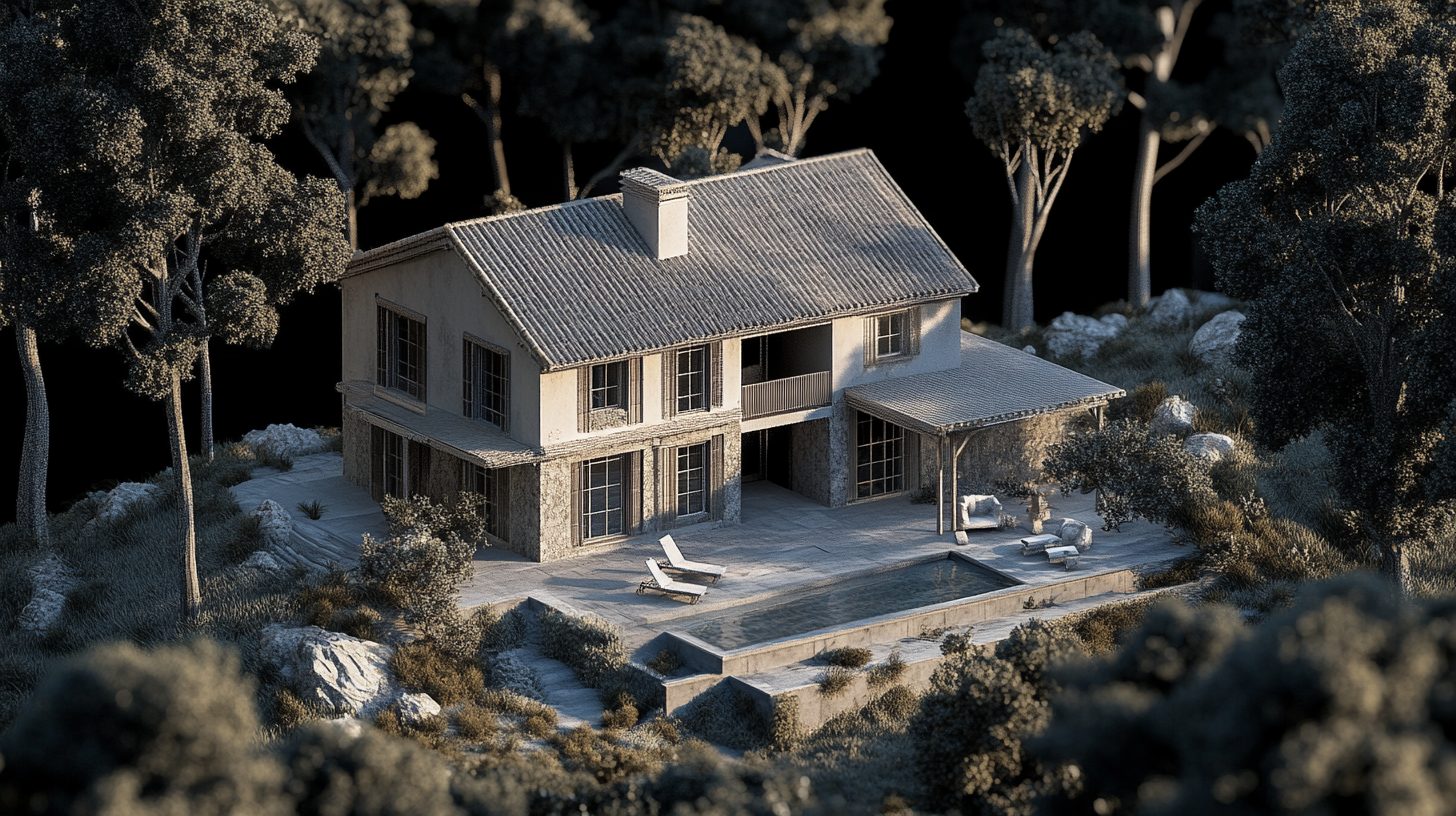

In this case, with the right prompting and the right parameters, it made pretty good in-app screenshots of 3D renders, especially in my favorite style, “lowpoly”. I still need more time and study to make the results better, though.

Dall-E

Not really bad, but it has one big downside: you cannot disable prompt enhancement while using this model. This basically made me take Dall-E out of the picture.

Ideogram

It is amazing. The details and everything else are good, you can turn prompt enhancement off, and you can tune different parameters, but it still has problems understanding background colors. This was the only problem I ran into with this model.

Stable Diffusion XL, 3 and 3.5

SD models perform really well, but you need to understand how to use them. When it comes to XL or 1.5, you really need a big library of LoRA adapters, text embeddings, ControlNets, etc.

I am not that interested in the 3 or 3.5 models, but they perform well without any special additions.

Something good about Stable Diffusion models is that they are known for being coherent, especially the fine-tunes. So one option we may consider for this particular project is a fine-tune of SD 1.5 or XL.

FLUX

FLUX has good results, especially when using the Ultra model. There are a few problems with this model (mostly licensing), and it also sometimes loses its coherency. I don’t know how to explain this; it feels like those times you press the brake pedal and the car doesn’t stop, yet there’s nothing wrong with the brake system.

Despite these problems, it seems to be one of the best options for generating images of 3D renders. It still needs more study.

Mann-E

Well, as the founder and CEO of Mann-E, I can’t leave my own platform behind! But since our models are mostly SDXL based, I guess the same applies here. Anyway, I performed the test on all three of our models.

I have to say it is not really any different from FLUX or SD, and the coherency is somewhat stable. What I have in mind is a way to fine-tune this model so that it generates better render images of 3D objects.

Converting images to 3D objects

I remember almost two years ago, we used a technique called photogrammetry in order to make 3D objects from photos. It was a really hard procedure.

I remember we needed at least three cameras at three different angles, a turntable and some sort of constant lighting system. It needed its own room and its own equipment, and it wasn’t really affordable for a lot of companies.

It was one step forward in making our business scalable, but it was also really expensive. Imagine that making a 3D model of a single shoe takes hours of photography with expensive equipment. No, it’s not what I want.

Nowadays, I am using an artificial intelligence system called TripoSR, which can convert a single image into a 3D object. I tested it and I think it has potential. I guess we have one of the ingredients we need to make this magical potion of the metaverse.

Now we need to find a way to build the metaverse using AI.

What’s next?

It is important to find out what is next. In my opinion, the next step is to find a way to make image models better at generating 3D renders. Designing a pipeline for image-to-3D is also necessary.

For now, I am also thinking of something different: you enter the prompt as text, it generates images, the images are fed to TripoSR, and then we have the 3D models we need.
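The flow of that idea can be sketched in a few lines. This is only a shape for the pipeline under stated assumptions, not a working integration: both stages are passed in as plain Python callables, the names `text_to_3d`, `Asset`, `fake_image_generator` and `fake_mesh_builder` are hypothetical, and returning the mesh as OBJ text is my assumption. Nothing here reflects the real interface of any image model or of TripoSR.

```python
from dataclasses import dataclass
from typing import Callable, List

# Both stages are plain callables, so this sketch makes no claim about the
# real interfaces of any image generator or of TripoSR.
ImageGenerator = Callable[[str, int], List[bytes]]  # (prompt, count) -> images
MeshBuilder = Callable[[bytes], str]                # image -> OBJ text (assumed)


@dataclass
class Asset:
    prompt: str
    image: bytes
    obj_text: str


def text_to_3d(prompt: str, generate_images: ImageGenerator,
               image_to_mesh: MeshBuilder, count: int = 1) -> List[Asset]:
    """Hypothetical pipeline: prompt -> images -> one 3D model per image."""
    assets = []
    for image in generate_images(prompt, count):
        assets.append(Asset(prompt, image, image_to_mesh(image)))
    return assets


# Stand-in stages so the sketch runs without any model installed.
def fake_image_generator(prompt: str, count: int) -> List[bytes]:
    return [f"{prompt} #{i}".encode() for i in range(count)]


def fake_mesh_builder(image: bytes) -> str:
    # Always returns a single triangle; a real stage would reconstruct a mesh.
    return "v 0 0 0\nv 1 0 0\nv 0 1 0\nf 1 2 3\n"


assets = text_to_3d("lowpoly shoe, 3D render",
                    fake_image_generator, fake_mesh_builder, count=2)
print(len(assets), "assets generated")
```

Keeping the two stages swappable matters here because the image model is still an open question, so the image-to-3D step should not care which generator produced its input.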

I guess the next actual step will be exploring the potential of universe/world generation by AI!